My previous internships had not prepared me for projects that changed this often, but because we were building something new specifically for NASA missions and operations rather than for customers, the team had to put together the design criteria and objectives for the first time. I also think that is what made working at NASA so enjoyable: being able to work on new and revolutionary products, even without a clear direction at times. The many intern events and tours helped me get to know interns in other departments and made me appreciate the effort and recognition that our mentors and the heads of the organization put in. I’m really thankful that I got to develop new skills and be surrounded by supportive and passionate peers and engineers.

Eyetracking Data

Damage Detection Task

Lessons Learned

Documentation

SenseGloves VR Haptic Gloves

NVIDIA RTX Dynamic Illumination

Throughout the internship, I also documented all of my progress, including common bugs encountered while setting up and running programs. I had access to documentation from previous interns that was very detailed and covered many possible errors, which helped me a lot with formatting and with deciding which additional steps to include in my instructions. It made me realize how time-saving and essential documenting previous work can be, especially when the work is picked up by people who have never used the tools or programs before. Initially I tried to document each step as I was developing features, but there were a couple of design changes midway, so I took quick general notes and went back later to refine the documentation and styling. Given how challenging blueprints can be to read, I think it really helped to start from small blocks of logic and build on top of them, explaining what each block and connection does in case any changes need to be made in the future.

I also helped another intern set up and integrate the SenseGlove Nova gloves into a human factors assessment template made in Unity, which allows the team to quickly set up different scenarios and test cases in a zero-g environment. The SenseGloves are haptic gloves designed for natural interactions in VR environments. They let users interact more realistically with objects, for example by feeling objects through their palms, sensing object size and stiffness, and feeling impacts and vibrations. It was challenging to set up the SenseGloves from scratch, but the previous intern who worked on them left very thorough instructions in his documentation, which really helped when setting them up on a new computer.

One of the main issues we had was that the Vive trackers could not connect to the computer, so the virtual hands did not follow the user’s hand movements. We eventually realized that the Vive trackers only worked with the HTC Vive headset, not with the Varjo headset we were initially using. To make sure that the SenseGloves could be integrated into the human factors assessment and work with different headsets and motion capture systems, we tried using the OpenXR plugin instead.

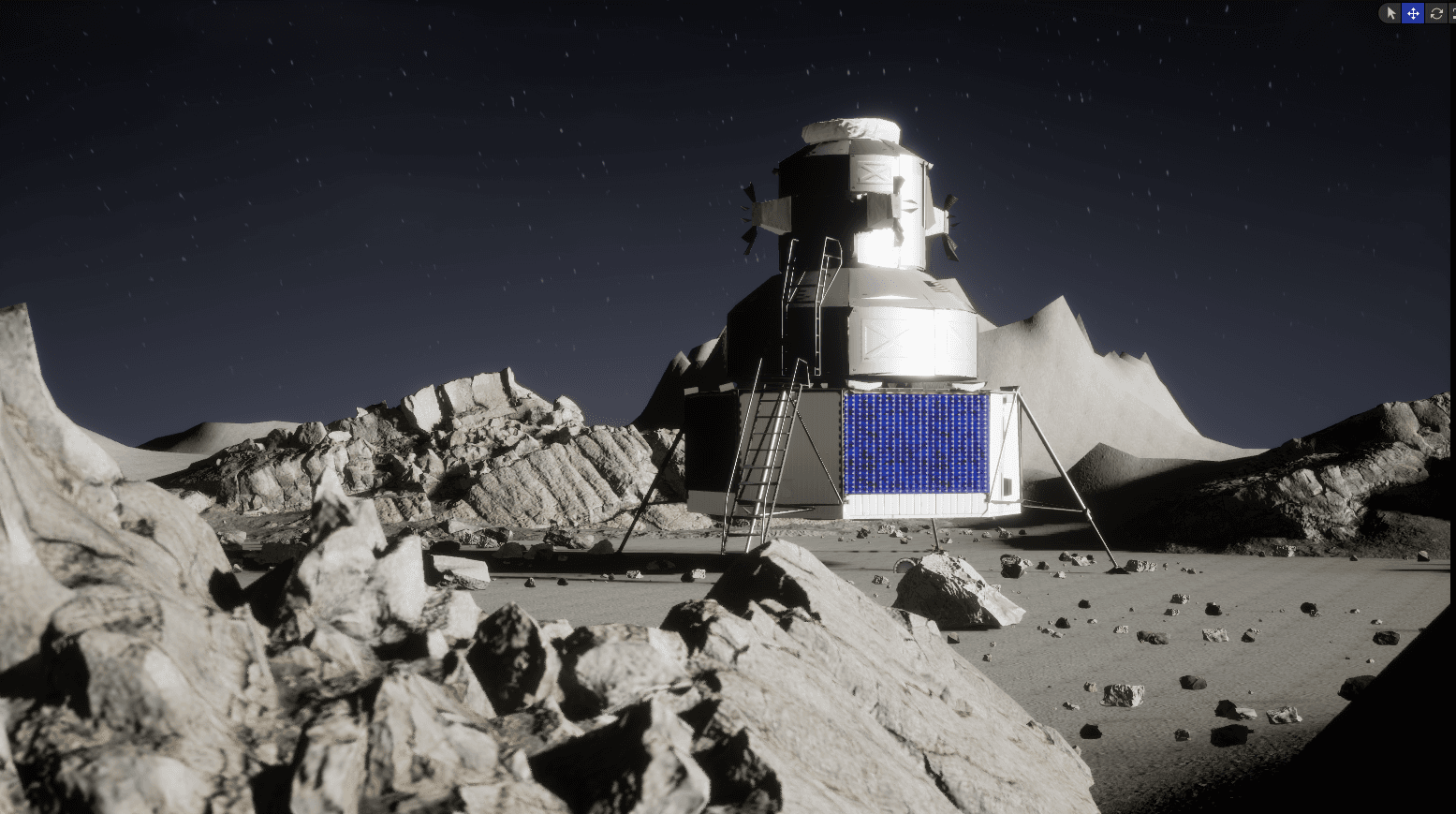

Another project I worked on was NVIDIA RTXDI, which includes tools such as Dynamic Illumination that add millions of dynamic lights to an environment without much cost to performance or resources. It accurately simulates how light interacts with objects in a scene, including reflections, shadows, and global illumination, in a way that mimics real-world lighting. Our team wanted to use tools such as Dynamic Illumination to make the lighting in the Lunar South Pole VR simulation as realistic as possible.

One issue was that the reflectivity of the metallic materials on the lunar lander model wasn’t being captured by the natural lighting. The metallic surfaces on the back of the lunar lander model were mostly dark when they should have been reflecting light, and some of the shadows were not accurately framed. Another issue was the long-term performance and resource usage of the VR simulation: the more that was added to the project, the more the visual quality declined in areas like the sides of the screen, and the moon surface under the lighting was becoming grainy. I explored NVIDIA RTXDI and DLSS (Deep Learning Super Sampling) in sample environments as a way to fix those issues in the Lunar South Pole VR simulation.

In the first two weeks of the internship, I used many online resources from the Unreal website and videos to learn how to build environments and create custom textures, materials, landscapes and lighting using Unreal Engine. I was introduced to the fundamentals of blueprints, which helped me create custom materials and textures for the tools I was building, such as the real-time heatmap. For the heatmap tool, I learned how to draw materials directly to render target surfaces following the user’s movement and store the image. I had trouble applying the render target to a 3D surface in VR and couldn’t find any resources online that supported this functionality, so the next approach I chose was a particle system. This involved learning how to create an after-image animation effect using the Niagara particle system and how to create skeletal meshes from static meshes for the animation to follow. Overall, I was able to develop a foundation for creating environments in Unreal while using many tools such as blueprints, render targets and particle systems.

VR Simulation and Environment in Unreal

Human Factors Assessment and Analysis

Over the summer, I also helped the other interns and the team with different projects, such as integrating haptic feedback gloves into a VR human factors assessment template in Unity, summarizing key takeaways from multiple team discussions, reorganizing the links and resources table, and using NVIDIA RTX DLSS (Deep Learning Super Sampling) to optimize ray tracing of dynamic lights. I really enjoyed working with the other interns and the team over the summer and gained a lot more insight into the real applications of virtual reality and human factors analysis, which I hope to continue pursuing.

Additional Projects:

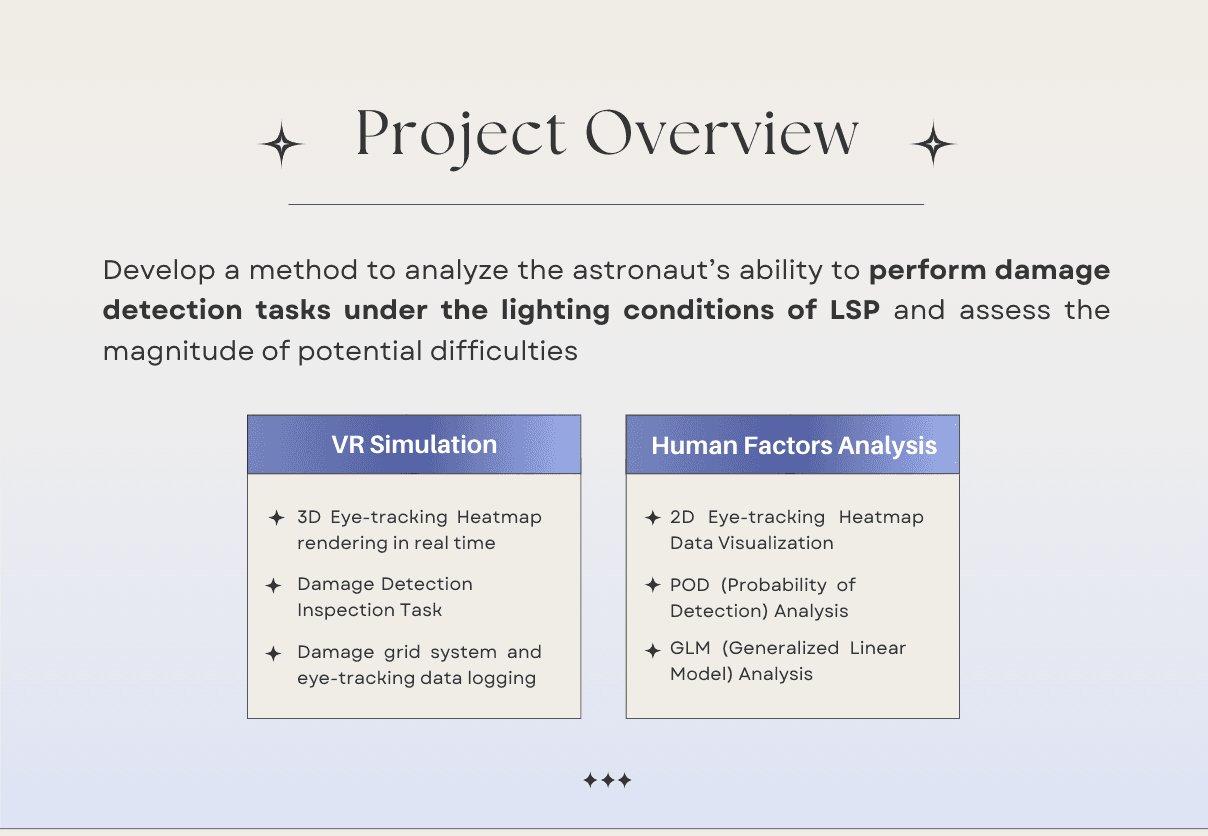

The motivation behind this project is that Artemis missions landing at the Lunar South Pole (LSP) will experience lighting conditions that differ greatly from any on Earth and from any seen during the Apollo program. At the LSP, the sun never rises more than 1.5 degrees above the horizon and circles the pole every 28 days. Without an atmosphere to diffuse light, the LSP has very long, dark shadows that are constantly changing. This poses high risks to LSP surface operations, for both hardware and astronauts. Many vision-based tasks will be performed at the LSP: vehicle ingress/egress, vehicle inspection, deployment/operation of payloads/hardware, sample identification/collection, etc.

The goal of the project is to analyze astronauts’ ability to perform typical EVA tasks under the lighting conditions of the LSP and to assess the magnitude of the difficulties they may face during exploration. To accomplish this goal, VR and physical simulations of the lunar lander and the surrounding moon terrain will be created and placed under lighting conditions similar to those at the LSP. Assessments will be conducted in which participants step into the simulation area and perform a set of tasks like those that astronauts are expected to perform on the moon.

Overall, I was really grateful for the opportunity to work on a variety of projects with real-world applications at NASA MSFC this summer through Jacobs. Being surrounded by mentors and other interns who are very skilled and experienced in their fields motivated me to work harder, improve, and offer valuable skills to the team’s projects. Helping other interns with their projects gave me valuable insight into the topics in team discussions, like developing a lunar depot, and made it easier for me to speak up in those meetings. I also learned to take the initiative and set up meetings with mentors and other interns to make sure that we were all on the same page and on track to meet our deadlines. It was slightly frustrating at times when design criteria or approaches were changed and our previous work was no longer used, but it helped to lean on the other interns and realize that a lot of these decisions were out of our control.

At the end I also correlated the eye-tracking gaze data with the probability of detecting the damaged points, finding that a higher percentage of gaze points, or viewing time, fell on areas that have damage. In some cases, more time spent looking was correlated with smaller damage, since the smaller a dent is, the longer it takes to detect. The remaining parts of this project, which I did not get to finish because the lunar lander model still needs adjustments, are fitting the GLM and conducting human factors assessments under different lighting conditions and on different areas of the lunar lander model.
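
One way to quantify "percentage of gaze points at areas that have damage" is to count the gaze samples that fall within some radius of a known damage location. The sketch below is only an illustration of that idea: the function name, the radius, and the sample coordinates are assumptions, not values from the assessments.

```python
import math

def fraction_near_damage(gaze_points, damage_points, radius):
    """Return the fraction of gaze samples within `radius` of any damage point."""
    if not gaze_points:
        return 0.0
    near = sum(
        1
        for g in gaze_points
        if any(math.dist(g, d) <= radius for d in damage_points)
    )
    return near / len(gaze_points)

# Made-up world coordinates, for illustration only.
gaze = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (5.0, 5.0, 5.0), (1.0, 1.0, 1.05)]
damage = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]

print(fraction_near_damage(gaze, damage, radius=0.2))  # 0.75
```

If the samples are logged at a fixed rate, this fraction also approximates the share of viewing time spent on damaged areas.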

After conducting the POD human factors assessments with several participants, the POD estimate was found to be 0.2416 in. The largest and smallest dents shown in the picture above are about 0.5 in and 0.1 in, the smallest of which was not detected by most participants. The estimate of 0.2416 in is therefore the smallest dent size that could theoretically be detected. In many of the tests I ran initially, the binary regression model did not converge because there were too many inconsistent points and not enough detected points. I met with my mentor to try to solve these issues, and he suggested plotting the detected damage points from all the POD human factors assessments together. I initially thought that the POD estimates from all the different assessments would be fitted together in the GLM analysis, but I learned that the GLM analysis is instead useful for viewing the differences between assessments taken under different lunar lighting conditions and on different areas of the lunar lander model. I also learned that the GLM has to satisfy assumptions such as linearity, homoscedasticity, normality and independence for the model to fit accurately.

The POD (probability of detection) is a metric for the smallest size of flaw, usually a crack or dent, that can be detected. The POD curve gives the probability of detecting a flaw as a function of the size of that flaw. The mean POD curve is the solid line, where the probability of detecting a flaw approaches 1 (POD(a) = 1) as the flaw size increases. The curve is treated as reaching 1 at the POD estimate: theoretically, all flaws above that size can be detected and flaws below it cannot. The confidence interval of the POD curve is the dashed line, which accounts for inaccuracies in the POD study. A GLM (generalized linear model) takes POD points from multiple studies and fits them to a regression model that generalizes ordinary linear regression.
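
To make the shape of a POD curve concrete, here is a minimal sketch of a binary (hit/miss) regression fitted with plain Newton-Raphson. It assumes a logistic link with a predictor that is linear in flaw size, which may differ from the model used in the actual analysis, and the hit/miss data below are made up purely for illustration. The names `pod`, `fit_pod` and `size_at_pod` are hypothetical helpers.

```python
import math

def pod(a, b0, b1):
    """Logistic POD curve: probability of detecting a flaw of size a."""
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * a)))

def fit_pod(sizes, hits, iters=50):
    """Fit b0 and b1 by Newton-Raphson on the binomial log-likelihood."""
    b0, b1 = 0.0, 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for a, y in zip(sizes, hits):
            p = pod(a, b0, b1)
            w = p * (1.0 - p)
            g0 += y - p            # gradient with respect to b0
            g1 += (y - p) * a      # gradient with respect to b1
            h00 += w               # entries of the 2x2 information matrix
            h01 += w * a
            h11 += w * a * a
        det = h00 * h11 - h01 * h01
        if det < 1e-12:            # nearly singular: stop instead of diverging
            break
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (h00 * g1 - h01 * g0) / det
        b0 += d0
        b1 += d1
        if abs(d0) + abs(d1) < 1e-10:
            break
    return b0, b1

def size_at_pod(p, b0, b1):
    """Flaw size at which the fitted curve reaches probability p."""
    return (math.log(p / (1.0 - p)) - b0) / b1

# Made-up hit (1) / miss (0) results for dents of 0.1 to 0.5 in.
sizes = [0.1, 0.1, 0.2, 0.2, 0.3, 0.3, 0.4, 0.4, 0.5, 0.5]
hits = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]

b0, b1 = fit_pod(sizes, hits)
print("size at 50% POD:", round(size_at_pod(0.5, b0, b1), 3))
print("size at 90% POD:", round(size_at_pod(0.9, b0, b1), 3))
```

A fit like this only has a finite solution when hits and misses overlap in size. If every dent above some size is detected and every dent below it is missed, the slope grows without bound, which is one common reason a binary regression fails to converge on small data sets.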

For the damage detection task and data logging in Unreal, I worked with the other interns and mentors to break down the lunar lander model into separate components, configure the new model with the damaged skin, which has dents of around 0.1 to 0.5 inches, and move that model into the main project. I also adjusted the CAD model of the grid that is overlaid on top of the lunar lander model so that the user could select the damaged points at the correct position with ray casting and log the ID of the grid cell, labeled A-N horizontally and 1-7 vertically. I conducted several human factors assessments for the damage detection task and saved the logged damage points selected by participants in order to perform POD (probability of detection) and GLM (generalized linear model) analysis.
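
The grid lookup itself can be sketched outside of Unreal. The example below assumes the ray cast hit has already been converted to normalized coordinates on the grid (`u` across, `v` down, both in the range 0 to 1) with cell A1 at the origin. That convention and the function name `grid_id` are assumptions made for illustration, not the blueprint’s actual logic.

```python
import string

COLS = string.ascii_uppercase[:14]  # columns "A" through "N"
ROWS = 7                            # rows 1 through 7

def grid_id(u, v):
    """Map normalized hit coordinates (u, v in [0, 1]) to a cell ID like 'C4'."""
    if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
        raise ValueError("hit lies outside the grid")
    # Clamp so that a hit exactly on the far edge maps to the last cell.
    col = min(int(u * len(COLS)), len(COLS) - 1)
    row = min(int(v * ROWS), ROWS - 1)
    return f"{COLS[col]}{row + 1}"

print(grid_id(0.0, 0.0))  # A1
print(grid_id(0.5, 0.5))  # H4
print(grid_id(1.0, 1.0))  # N7
```

Logging a short cell ID rather than raw coordinates makes it easy to compare selections across participants.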

I started off by creating a gaze dot blueprint that tracks the user’s gaze in real time as a single point, combining the gaze origin and gaze direction. Initially I used a sphere to visualize the eye-tracking data, checking whether the gaze ray was hitting an object and placing the point onto that object. The eye-tracking data is exported as a CSV file with x, y, z world coordinates and time, which I plotted using Python and matplotlib. I tried to use the density of the points to create a heatmap overlaid on the model that would convey how long and how intensely a user was gazing at an area, but there didn’t seem to be enough points generated each session to create sections of the heatmap that were adequately spaced out. To better visualize the eye-tracking gaze data as a heatmap, I used the gaze dot blueprint to create a particle system that highlights the points the user looked at longer.
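
The two ideas in this step, turning a gaze origin and direction into a point and accumulating how long the user looked at each region, can be sketched in Python as follows. The column names (`x`, `y`, `z`, `time`), the cell size, and the sample rows are assumptions for illustration. The real export may be laid out differently.

```python
import csv
import io
from collections import defaultdict

def gaze_point(origin, direction, distance):
    """Point reached by travelling `distance` along the gaze ray."""
    return tuple(o + d * distance for o, d in zip(origin, direction))

def dwell_by_cell(rows, cell_size):
    """Add the time between consecutive samples to the cell of the earlier sample."""
    dwell = defaultdict(float)
    for cur, nxt in zip(rows, rows[1:]):
        cell = tuple(int(c // cell_size) for c in cur[:3])
        dwell[cell] += nxt[3] - cur[3]
    return dict(dwell)

# Made-up export with assumed column names.
SAMPLE_CSV = """x,y,z,time
0.1,0.1,0.0,0.0
0.2,0.1,0.0,0.5
1.4,0.1,0.0,1.0
0.3,0.2,0.0,1.25
"""

reader = csv.DictReader(io.StringIO(SAMPLE_CSV))
rows = [
    (float(r["x"]), float(r["y"]), float(r["z"]), float(r["time"]))
    for r in reader
]

print(gaze_point((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 2.0))  # (0.0, 0.0, 2.0)
print(dwell_by_cell(rows, cell_size=1.0))
```

Binning by dwell time rather than raw point count keeps the result meaningful even when a session produces few samples, although a coarse cell size trades away spatial detail.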
